The Leadership Evolution

Leadership didn’t always come with the expectations we take for granted today. Two hundred years ago, you could’ve genuinely asked whether it was reasonable to expect leaders to understand human motivation. Now we’d call that table stakes.

I think we’re at the exact same moment with AI.

The next “basic competency”

In a few years, asking “Should founders know how to work with AI?” will sound as outdated as asking “Should a CEO know how to use email?” Not because everyone will suddenly become an AI expert, but because leadership expectations always expand to match the tools that shape work.

Email didn’t just make communication faster. It rewired coordination. It changed how decisions moved, how teams aligned, and how management actually happened inside a company. That kind of shift is why economists have long pointed to the “productivity paradox”: major technologies don’t automatically translate into big gains until organizations redesign how they operate. Paul David made this point with electricity in his classic essay “The Dynamo and the Computer,” showing that the real upside arrives when you rebuild processes around the new capability, not when you just plug it in. If you haven’t read it, it’s still one of the best reminders that tools don’t create leverage by themselves.

AI is a general-purpose technology, and this is its moment. The tool is here. The leverage depends on leadership.

What “AI leadership” actually means

When I say “AI leadership,” I’m not talking about founders who can explain transformer architectures at dinner. I’m talking about founders who can direct artificial intelligence the way great managers direct people: clear intent, good constraints, tight feedback loops, and strong judgment.

That means getting comfortable managing AI agents and hybrid human–AI teams. It means designing workflows where models draft, humans decide, and the organization gets faster without getting sloppy.

This is why recruiting is already changing. Today, when I talk to candidates, I’m not only trying to understand what they can do. I’m trying to understand what they can do with AI. I’ll ask, plainly: what tools do you use, how do you use them, and where do you not trust them?

Because in practice, I’m not hiring “one person.” I’m hiring a person plus the system they operate.

The productivity jump is real, but it’s not magic

We should be honest about the numbers. “Five to ten times more productive” is a vibe many teams feel, but the best research doesn’t hand you a universal multiplier you can copy-paste into a pitch deck.

What the credible studies do show is that measurable lifts appear quickly when the work is well-scoped and the tool is embedded into the workflow.

In a Microsoft Research experiment on GitHub Copilot, developers completed a programming task 55.8% faster when using Copilot compared to a control group. That’s not theoretical: it’s time-to-completion on a real coding assignment.

In a Fortune 500 customer support setting, an NBER paper found generative AI assistance increased productivity by about 14% on average, with the biggest gains among less-experienced workers.

And in a large randomized field experiment across thousands of workers, another NBER paper found that generative AI shifted how people spent time at work, including reducing time spent on email for active users.

Those are not “AI will do your job for you” outcomes. They’re “AI will change the shape of the job” outcomes. Which is exactly why leadership matters more than demos.
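To put those figures next to the “five to ten times” claim, it helps to convert them into the same unit. Here is a rough sketch in Python. It assumes “55.8% faster” means 55.8% less time spent on the task, which is my reading rather than something stated above, and the helper name is made up for illustration.

```python
def speedup_from_time_reduction(reduction: float) -> float:
    """Turn a fractional cut in completion time into a throughput multiplier.

    Assumption: `reduction` is the share of time saved (0.558 means the task
    takes 55.8% less time), so the same work fits into (1 - reduction) of the
    original time.
    """
    if not 0 <= reduction < 1:
        raise ValueError("reduction must be in the range [0, 1)")
    return 1.0 / (1.0 - reduction)


# Copilot experiment, read as a 55.8% cut in time-to-completion.
copilot_multiplier = speedup_from_time_reduction(0.558)

# Customer support study: a 14% productivity lift is already a multiplier.
support_multiplier = 1.14

print(f"Copilot task: about {copilot_multiplier:.2f}x")
print(f"Support work: about {support_multiplier:.2f}x")
```

Under that assumption, the Copilot result works out to a little over 2x on one well-scoped task, and the support result to 1.14x. Both are meaningful, and both are well short of 5x to 10x.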

Your new edge: judgment, strategy, and oversight

The rare skill isn’t technical. It’s judgment.

AI is very good at producing plausible output. Leadership is deciding what “good” means, when “fast” becomes “risky,” and where the organization needs a human to take responsibility.

This is the mental model I keep coming back to: great managers don’t do every task themselves. They decide which work to delegate, what “done” looks like, and how to spot problems early.

Great AI leaders do the same thing, except the teammate never sleeps and will confidently hand you something wrong if you don’t set the boundaries.

That’s why I don’t think the future belongs to the founder who knows the most prompts. It belongs to the founder who builds the best system of delegation.

Four practices I’m seeing the best founders adopt

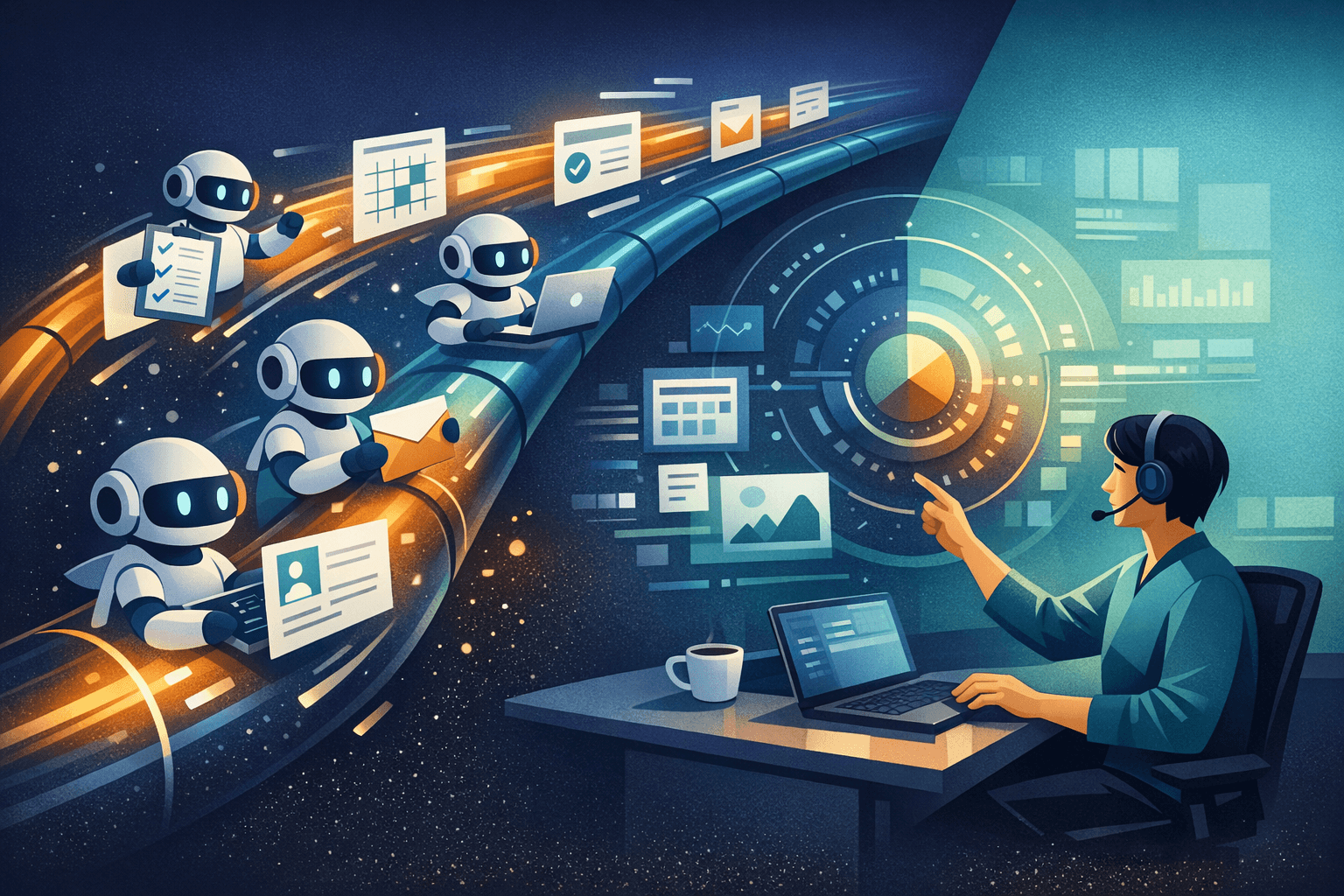

First, they make AI usage explicit. Not secret, not ad hoc, not “whatever you want.” They define which tasks are AI-drafted and which decisions require a human signature. They turn it into an operating system, not a personal hack.

Second, they instrument quality. They treat AI output like junior work: reviewed, tested, and measured. In software, this looks like small changes, strong CI, and meaningful tests. In content, it looks like source checking and consistent editorial standards. The DORA 2024 research is a useful warning here: AI can make developers feel faster even as overall delivery performance degrades when fundamentals slip.

Third, they redesign workflows instead of stapling AI onto old ones. This is the “productivity paradox” lesson in real life. If you keep the same meetings, the same vague ownership, and the same unclear specs, AI mostly produces more output-shaped noise. If you tighten the system, AI becomes leverage.

Fourth, they hire for AI fluency the way we once hired for Excel fluency. Not because spreadsheets are special, but because teams that can wield the tool consistently outperform teams that refuse.
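To make the first practice concrete, here is one way an explicit AI-usage policy could be written down so it is checkable rather than tribal knowledge. This is a minimal sketch, not a recommended standard: the task names, the `TaskPolicy` fields, and the `can_ship` helper are all hypothetical, and a real team would pick its own categories.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskPolicy:
    """Hypothetical policy record for one kind of work."""
    ai_may_draft: bool            # may a model produce the first version?
    human_signoff_required: bool  # must a named person approve before it ships?


# Illustrative entries only; these task names are invented for the example.
POLICIES = {
    "support_reply": TaskPolicy(ai_may_draft=True, human_signoff_required=True),
    "internal_summary": TaskPolicy(ai_may_draft=True, human_signoff_required=False),
    "pricing_decision": TaskPolicy(ai_may_draft=False, human_signoff_required=True),
}

# Anything not listed falls back to the strictest rule.
DEFAULT_POLICY = TaskPolicy(ai_may_draft=False, human_signoff_required=True)


def can_ship(task: str, ai_drafted: bool, human_approved: bool) -> bool:
    """Return True if this piece of work satisfies the policy for its task type."""
    policy = POLICIES.get(task, DEFAULT_POLICY)
    if ai_drafted and not policy.ai_may_draft:
        return False
    if policy.human_signoff_required and not human_approved:
        return False
    return True


print(can_ship("support_reply", ai_drafted=True, human_approved=False))    # False
print(can_ship("internal_summary", ai_drafted=True, human_approved=False))  # True
```

The design choice that matters is the default: work that nobody has classified is treated as human-only with sign-off, so the policy fails closed instead of open.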

The competitive gap is going to be brutal

Here’s what I believe: founders who learn to lead with AI early will feel like they’re running a company with unfair advantages.

Not because they’ll replace everyone with bots, but because they’ll compress cycles. They’ll go from idea to experiment to shipped result faster. They’ll keep small teams and still move like large ones. They’ll maintain startup agility while scaling output.

And the founders who resist will end up competing against organizations that can move dramatically faster at the same cost.

This isn’t speculation. It’s already happening in pockets. The only question is whether you build the muscle before your competitors do.

The question I’m asking myself (and you)

If AI leadership is becoming a core competency, then “Do we use AI?” is the wrong question.

The right question is: what are we using AI for, where do we insist on human judgment, and how do we build a system that gets better every week?

So I’ll end with the question I’m using to pressure-test my own team: what AI tools are you using today to amplify your team’s capabilities, and what would change if you treated that like a leadership skill, not a tech experiment?