
AI Is an Intern, Not a Senior Consultant

The AI hype cycle has been so loud lately that it’s messing with people’s expectations. Every week there’s a new tool claiming it can replace your whole team if you “just delegate everything” and step out of the way.

That’s not just optimistic. It’s dangerous.

The most useful mental model I’ve found is simple: treat AI like a smart intern, not a senior consultant.

Stop hiring “senior AI” in your head

If you had a talented intern starting Monday, you wouldn’t hand them your client relationships, your roadmap, and your production systems and say, “Good luck, see you in a month.” You’d give them a tight scope, clear context, examples of what good looks like, and you’d review what they ship.

But that’s exactly how a lot of founders are trying to use AI. They expect one prompt to become strategy, execution, and perfect outcomes.

When it inevitably falls short, the conclusion becomes: “AI isn’t that useful.” The real issue is the job description you invented for it.

The human-in-the-loop part isn’t optional

In practice, “human-in-the-loop” isn’t a cute best practice. It’s the difference between leverage and liability.

Whenever you ask an AI to do something that matters, you need a review gate. Not a vibe check. A real moment where you verify the output against reality, context, and risk.

The moment you build agents that can act in tools, this gets even more important. You want least-privilege access, because the best way to avoid catastrophic mistakes is to make them impossible. If the agent can’t access your sensitive systems, it can’t leak them. If it can’t push to production, it can’t ship garbage at 2 a.m.
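Here is a minimal sketch of what least privilege plus a review gate can look like in code. Everything in it is hypothetical (the `ToolRegistry` class, the tool names, the `approved_by` parameter) and it is not tied to any real agent framework. The idea it illustrates: the agent can only call tools you explicitly registered, and risky ones refuse to run without a named human approver.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Set


@dataclass
class ToolRegistry:
    """Hypothetical registry: the agent can only call what is listed here."""
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)
    needs_approval: Set[str] = field(default_factory=set)

    def register(self, name: str, fn: Callable[..., str],
                 needs_approval: bool = False) -> None:
        self.tools[name] = fn
        if needs_approval:
            self.needs_approval.add(name)

    def call(self, name: str, approved_by: Optional[str] = None, **kwargs) -> str:
        # Least privilege: anything not registered simply does not exist.
        if name not in self.tools:
            raise PermissionError(f"tool not granted: {name}")
        # Review gate: risky tools need a named human approver.
        if name in self.needs_approval and not approved_by:
            raise PermissionError(f"human approval required: {name}")
        return self.tools[name](**kwargs)


registry = ToolRegistry()
registry.register("read_docs", lambda query: f"results for {query}")
registry.register("open_pull_request",
                  lambda title: f"opened PR: {title}",
                  needs_approval=True)
# Deliberately no "deploy_to_production" tool: the agent cannot call it at all.
```

The design choice that matters is the default. A tool that was never registered fails closed, so forgetting to configure something makes the agent less capable, not more dangerous.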

And if your agent reads anything from the outside world, you have to assume it can be manipulated. Prompt injection is basically social engineering for models: malicious instructions hidden in “normal” content that try to redirect the assistant into doing something you didn’t intend. You don’t solve this with trust. You solve it with design: constrained tools, controlled inputs, explicit policies, and a mindset that treats untrusted text like untrusted code.
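One way to picture “untrusted text like untrusted code” is a session that tracks whether outside content has entered the context, and turns off high-risk tools once it has. This is a rough sketch under my own assumptions: the `Session` class, the tool names, and the `<untrusted_data>` delimiters are all made up for illustration, and the delimiters are a label for the model, not a security boundary.

```python
from dataclasses import dataclass

# Hypothetical names for tools that can cause real damage.
HIGH_RISK_TOOLS = {"send_email", "delete_file"}


@dataclass
class Session:
    tainted: bool = False  # True once untrusted text has entered the context

    def add_untrusted(self, text: str) -> str:
        """Mark the session as tainted and label the text as data."""
        self.tainted = True
        # The wrapper only labels the content; it does not make it safe.
        return f"<untrusted_data>\n{text}\n</untrusted_data>"

    def may_call(self, tool: str) -> bool:
        """The policy lives in code, so no prompt text can talk its way past it."""
        return not (self.tainted and tool in HIGH_RISK_TOOLS)
```

The point is where the decision is made. The model never gets to decide whether a web page is trustworthy. The code around it does, and it decides before the tool call happens.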

Ground the intern in real sources, not vibes

One reason AI feels magical is also why it breaks: it’s happy to produce a coherent answer even when the answer shouldn’t exist.

If the output needs to be factual, you can’t rely on raw generation. You have to ground it in verified sources. That’s where retrieval-augmented generation (RAG) matters: you retrieve the relevant documents, put them in front of the model, and instruct it to answer from them instead of guessing.
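To make the grounding idea concrete, here is a toy sketch. It assumes a tiny in-memory document set and uses crude word overlap in place of a real search index or embeddings, and the document names and helper functions are invented for the example. What it shows is the shape of the workflow: find sources first, build the prompt around them, and refuse to ask the model at all when nothing relevant turns up.

```python
import re
from typing import Dict, List, Optional, Tuple

# Hypothetical knowledge base; in practice this would be your own documents.
DOCS = {
    "pricing.md": "The starter plan costs 20 dollars per month.",
    "refunds.md": "Refunds are available within 30 days of purchase.",
}


def tokenize(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: Dict[str, str],
             min_overlap: int = 2) -> List[Tuple[str, str]]:
    """Return documents sharing at least min_overlap words with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(body)), name, body) for name, body in docs.items()]
    return [(name, body) for score, name, body in sorted(scored, reverse=True)
            if score >= min_overlap]


def build_prompt(question: str, docs: Dict[str, str]) -> Optional[str]:
    """Build a source-grounded prompt, or None when there is nothing to ground on."""
    hits = retrieve(question, docs)
    if not hits:
        return None  # Better to say "I don't know" than to let the model guess.
    sources = "\n".join(f"[{name}] {body}" for name, body in hits)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )
```

Grounding narrows what the model works from, but it does not guarantee the answer is faithful to the sources, so the review gate still applies to whatever comes back.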

It’s the same with an intern. If you want them to write a proposal, you don’t say “be persuasive.” You give them past proposals, the client context, pricing constraints, and the non-negotiables.

Coding is where this metaphor becomes obvious

I do most of my coding with AI agents now. That doesn’t make me less of an engineer. It makes me more effective.

I’m not spending my time on syntax and boilerplate. I’m spending it where I’m actually hard to replace: shaping the architecture, defining the constraints, reviewing trade-offs, and making the judgment calls.

AI is great at the grunt work. It can generate functions, refactor repetitive patterns, translate code, draft tests, and help you explore approaches fast.

But it’s still weak at architecture. It doesn’t carry your system’s history in its bones. It doesn’t feel the long-term maintenance cost of a shortcut. It doesn’t have the product intuition that tells you which edge case will become a support nightmare.

That’s why the supervision stack matters. AI-written code should go through the same rigor you’d apply to any junior contributor: tests that actually run, linters that enforce consistency, and human review that checks correctness, security, and intent. The goal isn’t to babysit a robot. The goal is to keep quality high while you move faster.
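The supervision stack can be as simple as a merge gate that runs your automated checks and then still waits for a human. The sketch below is one possible shape, not a prescription: `run_checks` and `ready_to_merge` are hypothetical helpers, and the commands in the comment assume you happen to use pytest and ruff. Swap in whatever your project actually runs.

```python
import subprocess
import sys
from typing import List, Sequence


def run_checks(checks: Sequence[Sequence[str]]) -> List[str]:
    """Run each command and return the ones that exited with a non-zero code."""
    failures = []
    for cmd in checks:
        result = subprocess.run(cmd, capture_output=True, text=True)
        if result.returncode != 0:
            failures.append(" ".join(cmd))
    return failures


def ready_to_merge(checks: Sequence[Sequence[str]], human_approved: bool) -> bool:
    """Automated checks are necessary but not sufficient: a human signs off too."""
    return not run_checks(checks) and human_approved


# Example configuration, assuming pytest and ruff are installed in the project:
# CHECKS = [
#     [sys.executable, "-m", "pytest", "-q"],
#     [sys.executable, "-m", "ruff", "check", "."],
# ]
# ready_to_merge(CHECKS, human_approved=True)
```

Passing tests and a clean lint run tell you the code is consistent. Only the reviewer can tell you whether it is what you actually meant to build.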

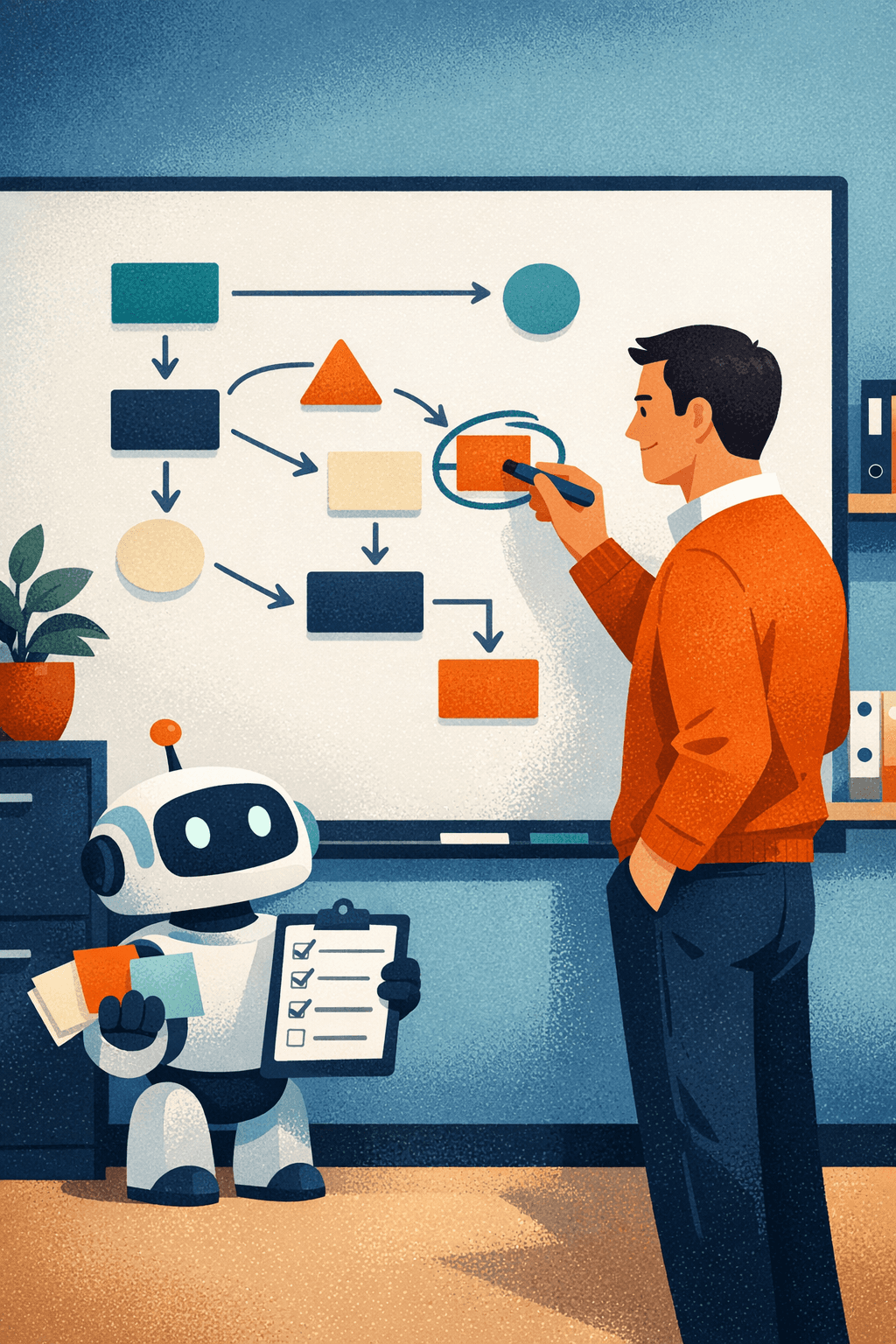

What AI can’t replace is the thing you’re paid for

The value you bring to your business isn’t mechanical execution. It’s judgment.

AI can draft a proposal, but it can’t decide which clients you should pursue and why. AI can summarize your notes, but it can’t set your priorities in a way that matches your goals and appetite for risk. AI can write an email, but it can’t build a relationship.

That part is still human, and it will stay human longer than the marketing decks want to admit.

So what should you actually do?

Stop shopping for tools that promise to replace you.

Start building a workflow that makes you better at being you.

Give the AI the boring work, but keep your hands on the steering wheel. Add review gates. Ground outputs in sources you trust. Restrict permissions. Assume untrusted inputs can be hostile. Treat “agentic” systems like you’d treat any powerful automation: with governance, observability, and clear limits.

The goal isn’t to work less. It’s to work on the right things.